前言

在构建 Kubernetes 多 Master 集群时,实现负载均衡是至关重要的一环。通过多台 Master 节点配合使用 Nginx 和 Keepalived 等工具,可以有效提高集群的可靠性和稳定性,确保系统能够高效运行并有效应对故障。接下来将介绍如何配置这些组件。

接上篇:二进制部署Kubernetes集群——单Master和Node组件-CSDN博客

目录

2. 修改配置文件 kube-apiserver 中的 IP

4.1 修改 bootstrap.kubeconfig 和 kubelet.kubeconfig 配置

4.2 重启 kubelet 和 kube-proxy 服务

4. 创建 service account 并绑定默认 cluster-admin 管理员集群角色

一、环境配置

1. 集群节点配置

机器内存有限,按需自行分配:

| 节点名称 | IP | 部署组件 | 配置 |

| k8s集群master01 | 192.168.190.100 | kube-apiserver kube-controller-manager kube-scheduler | 2C/3G |

| k8s集群master02 | 192.168.190.101 | kube-apiserver kube-controller-manager kube-scheduler | 2C/2G |

| k8s集群node01 | 192.168.190.102 | kubelet kube-proxy docker | 2C/2G |

| k8s集群node02 | 192.168.190.103 | kubelet kube-proxy docker | 2C/2G |

| etcd集群etcd01 | 192.168.190.100 | etcd | |

| etcd集群etcd02 | 192.168.190.102 | etcd | |

| etcd集群etcd03 | 192.168.190.103 | etcd | |

| 负载均衡nkmaster |

192.168.190.104 | nginx+keepalive01 | 1C/1G |

| 负载均衡nkbackup | 192.168.190.105 | nginx+keepalive02 | 1C/1G |

2. 操作系统初始化配置

新增节点设备做一下配置:

① 关闭防火墙及其他规则

所有节点操作:

systemctl stop firewalld

systemctl disable firewalld

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

# 清空iptables防火墙的规则,包括过滤规则、NAT规则、Mangle规则以及自定义链

setenforce 0

sed -i 's/enforcing/disabled/' /etc/selinux/config② 关闭 swap

所有节点操作:

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

# 在/etc/fstab文件中找到包含swap的行,并在这些行之前加上#,从而注释掉这些行③ 在 master01、master02 添加 hosts

cat >> /etc/hosts << EOF

192.168.190.100 master01

192.168.190.101 master02

192.168.190.102 node01

192.168.190.103 node02

EOF④ 调整内核参数

所有节点操作:

cat > /etc/sysctl.d/k8s.conf << EOF

#开启网桥模式,可将网桥的流量传递给iptables链

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

#关闭ipv6协议

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

EOF

sysctl --system

# 重新加载内核参数设置⑤ 时间同步

所有机器均需要同步:

yum install ntpdate -y

ntpdate time.windows.com二、部署 master02 节点

1. 拷贝证书文件、master 组件

从 master01 节点上拷贝证书文件、各master组件的配置文件和服务管理文件到 master02 节点

[root@master01 ~]# scp -r /opt/etcd/ root@master02:/opt/

[root@master01 ~]# scp -r /opt/kubernetes/ root@master02:/opt/

[root@master01 ~]# scp -r /root/.kube root@master02:/root/

[root@master01 ~]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@master02:/usr/lib/systemd/system/

2. 修改配置文件 kube-apiserver 中的 IP

[root@master02 ~]# vim /opt/kubernetes/cfg/kube-apiserver

--etcd-servers=https://192.168.190.100:2379,https://192.168.190.102:2379,https://192.168.190.103:2379 \

--bind-address=192.168.190.101 \ # 修改为 master02 ip

--secure-port=6443 \

--advertise-address=192.168.190.101 \ # 修改为 master02 ip

3. 启动各服务并设置开机自启

[root@master02 ~]# systemctl enable --now kube-apiserver.service

[root@master02 ~]# systemctl status kube-apiserver.service

Active: active (running) since 三 2024-05-15 17:09:29 CST; 19s ago

[root@master02 ~]# systemctl enable --now kube-controller-manager.service

[root@master02 ~]# systemctl status kube-controller-manager.service

Active: active (running) since 三 2024-05-15 17:10:56 CST; 9s ago

[root@master02 ~]# systemctl enable --now kube-scheduler.service

[root@master02 ~]# systemctl status kube-scheduler.service

Active: active (running) since 三 2024-05-15 17:12:12 CST; 8s ago

4. 查看 node 节点状态

[root@master02 ~]# ln -s /opt/kubernetes/bin/* /usr/local/bin/

[root@master02 ~]# kubectl get nodes

# 列出所有集群中的节点,包括节点的名称和状态信息

NAME STATUS ROLES AGE VERSION

192.168.190.102 Ready <none> 42h v1.20.11

192.168.190.103 Ready <none> 178m v1.20.11

[root@master02 ~]# kubectl get nodes -o wide

# -o=wide:输出额外信息;对于Pod,将输出Pod所在的Node名

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

192.168.190.102 Ready <none> 42h v1.20.11 192.168.190.102 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://26.1.2

192.168.190.103 Ready <none> 178m v1.20.11 192.168.190.103 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://26.1.2

此时在 master02 节点查到的 node 节点状态仅是从 etcd 查询到的信息,而此时 node 节点实际上并未与 master02 节点建立通信连接,因此需要使用一个 VIP 把 node 节点与 master 节点都关联起来。

三、部署负载均衡

配置 load balancer 集群双机热备负载均衡(nginx 实现负载均衡,keepalived 实现双机热备),在 nkmaster、nkbackup 节点上操作。

1. 配置 nginx 的官方 yum 源

cat > /etc/yum.repos.d/nginx.repo << 'EOF'

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

EOF2. 安装 nginx,修改配置文件

2.1 yum 安装

yum install nginx -y2.2 配置反向代理负载均衡

修改 nginx 配置文件,配置四层反向代理负载均衡,指定 k8s 群集两台 master 的节点 ip 和 6443 端口。

vim /etc/nginx/nginx.conf

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.190.100:6443;

server 192.168.190.101:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

http {

……2.3 启动 nginx 服务

nginx -t

systemctl enable --now nginx.service

netstat -natp | grep nginx # 查看已监听6443端口

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 2410/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 2410/nginx: master3. 部署 keepalived 服务

3.1 yum 安装

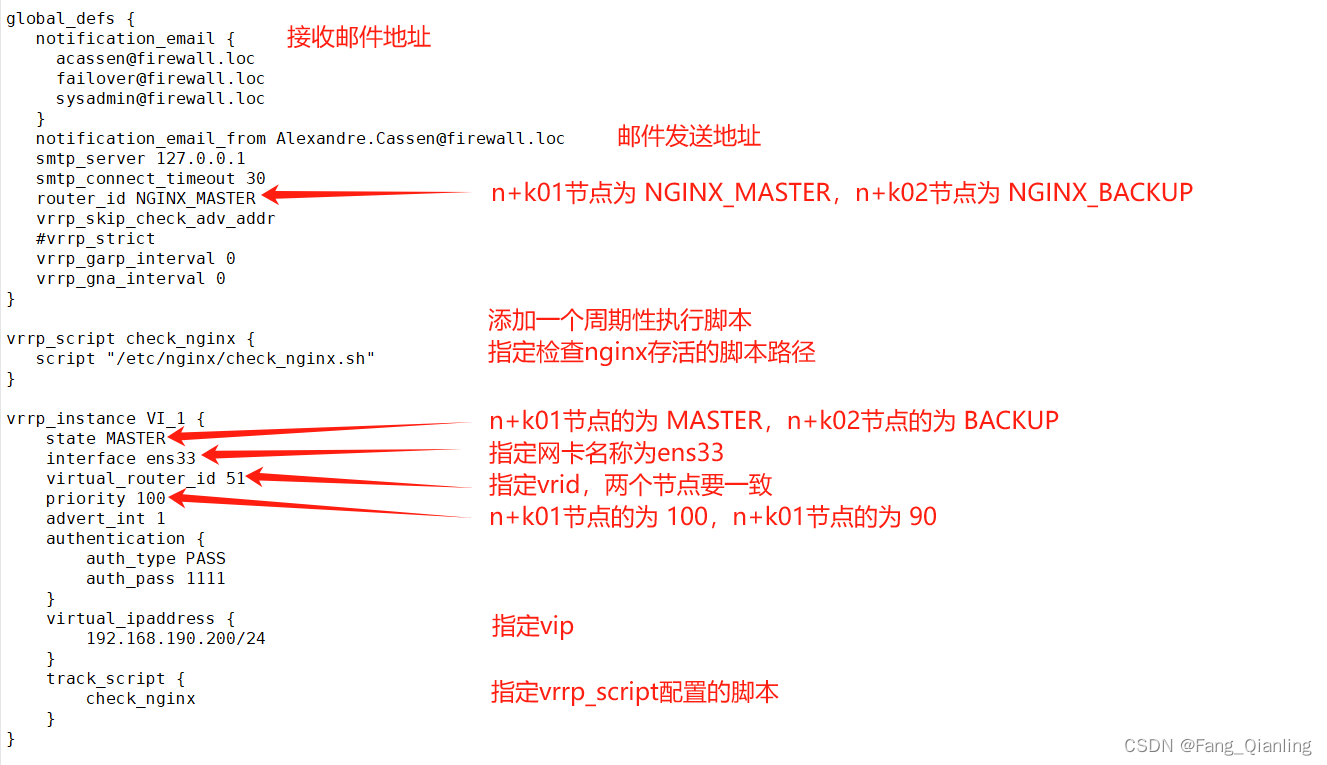

yum install keepalived -y3.2 修改配置文件

vim /etc/keepalived/keepalived.conf

3.3 创建 nginx 状态检查脚本

vim /etc/nginx/check_nginx.sh

#!/bin/bash

#egrep -cv "grep|$$" 用于过滤掉包含grep 或者 $$ 表示的当前Shell进程ID,即脚本运行的当前进程ID号

count=$(ps -ef | grep nginx | egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

chmod +x /etc/nginx/check_nginx.sh3.4 启动 keepalived 服务

systemctl enable --now keepalived.service

[root@nkmaster ~]# ip a

inet 192.168.190.200/24 scope global secondary ens334. 修改 node 节点配置文件

修改node节点上的bootstrap.kubeconfig,kubelet.kubeconfig配置文件为VIP

4.1 修改 bootstrap.kubeconfig 和 kubelet.kubeconfig 配置

[root@node01 ~]# cd /opt/kubernetes/cfg/

[root@node01 cfg]# vim bootstrap.kubeconfig

server: https://192.168.190.200:6443

[root@node01 cfg]# vim kubelet.kubeconfig

server: https://192.168.190.200:6443

[root@node01 cfg]# vim kube-proxy.kubeconfig

server: https://192.168.190.200:6443

4.2 重启 kubelet 和 kube-proxy 服务

systemctl restart kubelet.service

systemctl restart kube-proxy.service5. 查看节点连接状态

在 nkmaster 上查看 nginx 和 node、master 节点的连接状态

[root@nkmaster ~]# netstat -natp | grep nginx

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 2410/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 2410/nginx: master

tcp 0 0 192.168.190.200:6443 192.168.190.102:44658 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.103:41016 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:59136 192.168.190.100:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38484 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38472 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.102:44642 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38494 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.103:41020 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.103:41014 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.102:44650 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.103:41012 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.102:44644 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.102:44626 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.103:41042 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.103:41018 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:59156 192.168.190.100:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:59122 192.168.190.100:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.102:44652 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:59132 192.168.190.100:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38498 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38502 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.102:44646 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38488 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38480 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.200:6443 192.168.190.103:40996 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:59144 192.168.190.100:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:59118 192.168.190.100:6443 ESTABLISHED 2411/nginx: worker

tcp 0 0 192.168.190.104:38514 192.168.190.101:6443 ESTABLISHED 2411/nginx: worker6. 测试创建 pod

在 master01 节点上操作

6.1 部署容器

[root@master01 ~]# kubectl run nginx --image=nginx

pod/nginx created

6.2 查看 Pod 的状态信息

[root@master01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 50s

[root@master01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 109s

# ContainerCreating:正在创建中

# Running:创建完成,运行中

[root@master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 2m26s 10.244.0.4 192.168.190.102 <none> <none>

# READY为1/1,表示这个Pod中有1个容器7. 访问页面

在对应网段的 node 节点上操作,可以直接使用浏览器或者 curl 命令访问

[root@node01 ~]# curl 10.244.0.48. 查看日志

在master01节点上查看nginx日志

[root@master01 ~]# kubectl logs nginx

10.244.0.1 - - [15/May/2024:10:41:13 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.29.0" "-"

四、部署 Dashboard

1. Dashboard 简介

仪表板是基于 Web 的 Kubernetes 用户界面,用于部署、故障排除和管理容器化应用程序和集群资源。通过仪表板,您可以概述应用程序状态、创建/修改 Kubernetes 资源,执行滚动更新、重启 Pod 等操作,并获取资源状态和错误信息。

2. 上传生成镜像包

上传 dashboard.tar 和 metrics-scraper.tar 至 node 节点 /opt/

2.1 分别在 node01、node02 节点生成镜像

[root@node01 opt]# docker load -i dashboard.tar

69e42300d7b5: Loading layer 224.6MB/224.6MB

Loaded image: kubernetesui/dashboard:v2.0.0

[root@node01 opt]# docker load -i metrics-scraper.tar

57757cd7bb95: Loading layer 238.6kB/238.6kB

14f2e8fb1e35: Loading layer 36.7MB/36.7MB

52b345e4c8e0: Loading layer 2.048kB/2.048kB

Loaded image: kubernetesui/metrics-scraper:v1.0.4

[root@node01 opt]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest e784f4560448 11 days ago 188MB

quay.io/coreos/flannel v0.14.0 8522d622299c 2 years ago 67.9MB

k8s.gcr.io/coredns 1.7.0 bfe3a36ebd25 3 years ago 45.2MB

kubernetesui/dashboard v2.0.0 8b32422733b3 4 years ago 222MB

kubernetesui/metrics-scraper v1.0.4 86262685d9ab 4 years ago 36.9MB

busybox 1.28.4 8c811b4aec35 5 years ago 1.15MB

registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64 3.0 99e59f495ffa 8 years ago 747kB

3. 上传 recommended.yaml 文件

在 master01 节点上操作,上传 recommended.yaml 文件到 /opt/k8s 目录中

[root@master01 ~]# cd /opt/k8s/

[root@master01 k8s]# rz -E

rz waiting to receive.

[root@master01 k8s]# vim recommended.yaml

……

spec:

ports:

- port: 443

targetPort: 8443

nodePort: 30001 # 添加

type: NodePort # 添加

……

[root@master01 k8s]# kubectl apply -f recommended.yaml

4. 创建 service account 并绑定默认 cluster-admin 管理员集群角色

[root@master01 k8s]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

# 在 kube-system 命名空间下创建了一个名为 dashboard-admin 的服务账户

# 主要用于给 Pod 中运行的应用程序提供对 API 服务器的访问凭证

[root@master01 k8s]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

# 创建了一个集群角色绑定 (ClusterRoleBinding),它将名为 cluster-admin 的集群角色绑定到了之前创建的服务账户 dashboard-admin 上,且该服务账户位于 kube-system 命名空间

# cluster-admin 是一个非常强大的角色,拥有对整个集群的完全访问权限。这意味着通过 dashboard-admin 服务账户运行的任何 Pod 都将拥有集群管理员权限。

[root@master01 k8s]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

# 列出 kube-system 命名空间下的所有 Secrets,并通过 awk 筛选出与 dashboard-admin 服务账户相关的 Secret 名称(通常服务账户会自动生成一个 Secret 来存储其访问令牌)

# 根据上一步得到的 Secret 名称,这个命令用来展示该 Secret 的详细信息

Name: dashboard-admin-token-zwbg5

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 50dbe025-dcfe-4954-b430-e3fb70393679

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlNhajQyX1pHSXBZSm1uOVRDc3ROSWpfWFlPNFRwaDAwT3lpeFljeldYM3cifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tdGwybW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiN2JiNTc2MmUtNGMyOS00NjhkLTliM2EtYTUwYmU4NWZiNzhmIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.XqOPb-_MU-B3bh-JeYWbDMRGiG0VC5DWhNiZHXZGLy8Zwzt5IbbOCsrQdFg4SMQ4mQSd1bM3zd3X8QxiuhqFUdn8VmW2nABG0M0h8uI9RFZRQePJ4AOnVMXfgyZbqtuHqeDc3T4eX0-ZZvdkV3jc7bbYKf2byzC-pqnm73-XEk13RhNFahGGvt08AmzIUb6v6jiO85weKbrT3M_reKTarBv9SCaqo-fNms5UAfnOyGuPTObFQGqu6Tp21Gy1L4GsmGILM-IGvPRkqSgwc0cpjcFD9sJyG7-esrUvYOM_KPsTQn9G5rYRpYd4Ad40JLsKIBFJ7k72kLYHJV5l-_r0cQ

5. 使用输出的 token 登录 Dashboard

https://NodeIP:30001,建议使用360安全浏览器或者火狐浏览器

五、错误示例

1. 错误状态

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.190.102 NotReady <none> 2d12h v1.20.11

192.168.190.103 NotReady <none> 13h v1.20.11

# kubernetes 集群中有两个节点处于 NotReady 状态,无法接受新的 Pod 部署2. 错误原因

2.1 查看 kubelet 服务日志

节点 192.168.190.102 的 kubelet 服务无法更新节点状态,因为无法连接到 192.168.190.200:6443

[root@node01 ~]# journalctl -f -u kubelet.service

-- Logs begin at 一 2024-05-13 10:23:14 CST. --

5月 16 10:53:04 node01 kubelet[59283]: E0516 10:53:04.821047 59283 kubelet_node_status.go:470] Error updating node status, will retry: error getting node "192.168.190.102": Get "https://192.168.190.200:6443/api/v1/nodes/192.168.190.102?timeout=10s": dial tcp 192.168.190.200:6443: connect: no route to host

5月 16 10:53:07 node01 kubelet[59283]: E0516 10:53:07.825185 59283 kubelet_node_status.go:470] Error updating node status, will retry: error getting node "192.168.190.102": Get "https://192.168.190.200:6443/api/v1/nodes/192.168.190.102?timeout=10s": dial tcp 192.168.190.200:6443: connect: no route to host

5月 16 10:53:10 node01 kubelet[59283]: E0516 10:53:10.833419 59283 reflector.go:138] object-"kube-system"/"flannel-token-qsnr6": Failed to watch *v1.Secret: failed to list *v1.Secret: Get "https://192.168.190.200:6443/api/v1/namespaces/kube-system/secrets?fieldSelector=metadata.name%3Dflannel-token-qsnr6&resourceVersion=2727": dial tcp 192.168.190.200:6443: connect: no route to host

# journalctl: 是 Systemd 系统日志查询工具,用于查看和管理 systemd 初始化系统产生的日志。

# -f: 参数表示跟随模式,即在终端上持续显示新的日志条目,这对于监视服务的实时运行状况非常有用。

# -u kubelet.service: 指定查看 kubelet.service 这个 Systemd 单元的服务日志。kubelet 是 Kubernetes 的核心组件之一,负责在每个节点上运行容器,管理 pod 状态并与 Kubernetes 主控进行通信。2.2 检查 keepalived 服务 vip

由于虚拟环境挂起,导致服务无响应,vip 可能会消失。由于配置文件 bootstrap.kubeconfig、kubelet.kubeconfig、kube-proxy.kubeconfig 指向 vip,重启 keepalived 服务恢复。